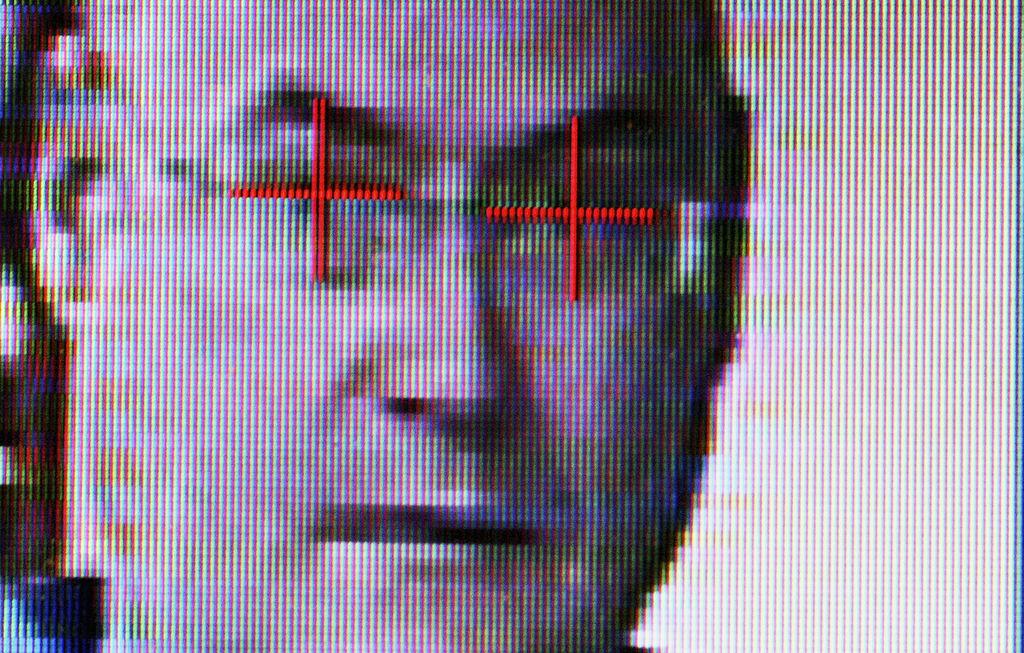

A senior peer has called for legislation to “drastically limit” the use of facial recognition technology (FRT) after chilling claims that it is being used by stalkers and predators.

Baroness Jenny Jones told The Epoch Times that the government must put in place a “structure for scrutiny” over public and private use of citizens’ biometric data.