A week after the Australian government proposed new laws to target deepfake artificial intelligence (AI) images, police have arrested a teenage boy following a disturbing incident at a Melbourne private school.

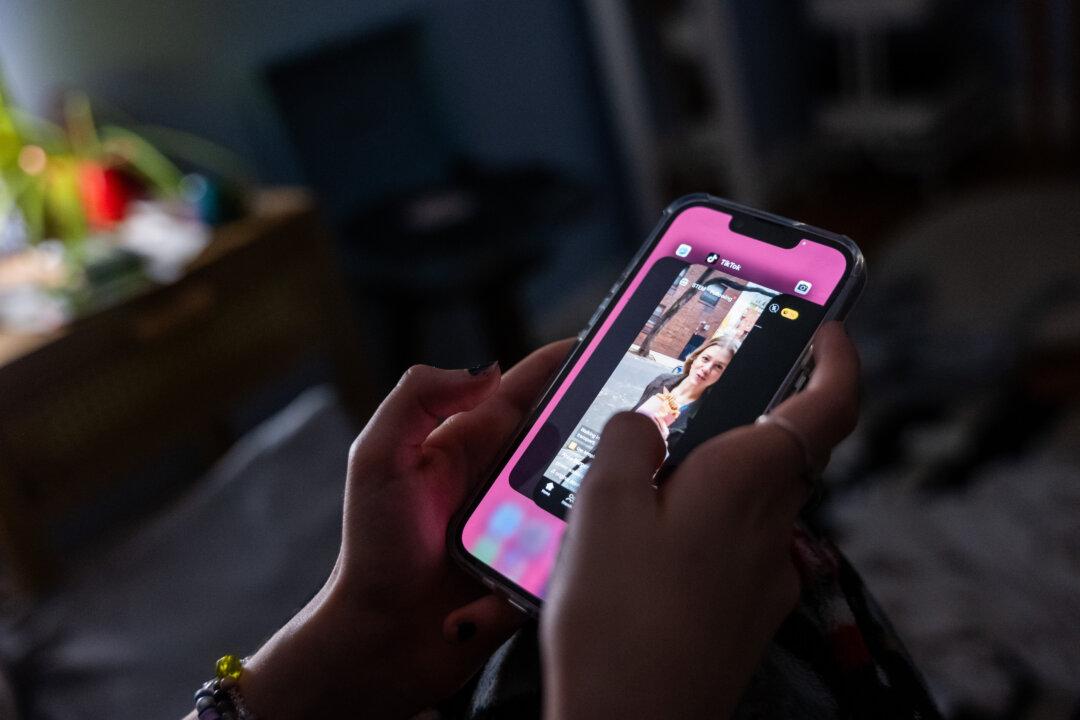

Around 50 female students were targeted, with AI used to create fake images and videos from photos of the girls’ faces.