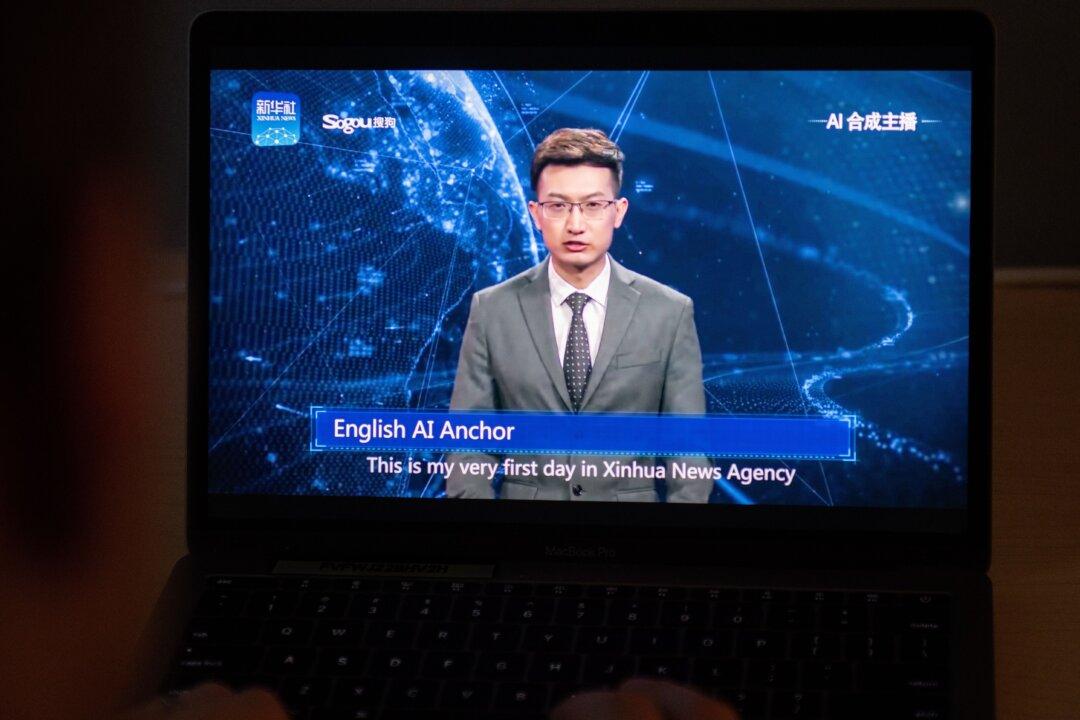

Chinese state-aligned actors are using artificial intelligence-generated deepfake news anchors in pro-China propaganda videos on social media, according to a report published on Feb. 7.

The detailed report by U.S.-based research firm Graphika says this is the first time the firm has observed “state-aligned influence operation actors using video footage of AI-generated fictitious people in their operations.”