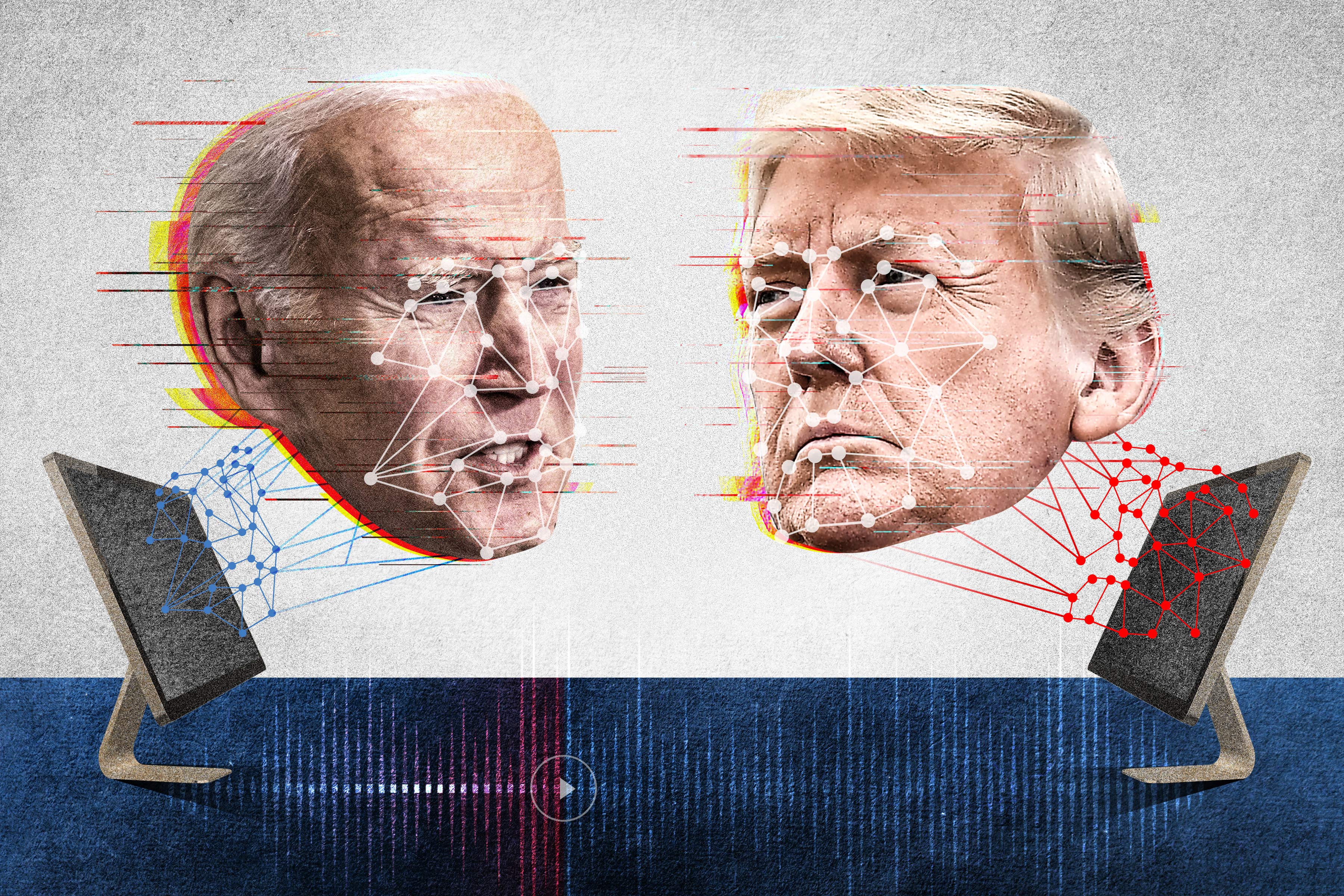

Artificial intelligence has reached a point where widely accessible tools can synthesize highly realistic material: images, audio, and, increasingly, video generated entirely by AI.

As the industry leaps forward and the human eye strains to tell real from artificial, some experts and entrepreneurs have scrambled to think up solutions.