The British government should introduce a system for recording the misuse and malfunctions of artificial intelligence (AI) in order to minimise long-term issues and dangers, according to a report released on Wednesday by the Centre for Long-Term Resilience (CLTR).

In a rapidly developing technological world, the report suggests that failing to implement the scheme could leave the UK ill-prepared for major incidents, which could cause serious harm to society.

It found that more than 10,000 safety incidents involving active AI systems have been recorded since 2014 in an AI incident database compiled by the Organisation for Economic Co-operation and Development (OECD), an international research body.

A Critical Gap

The new report also identified a “critical gap” in the UK’s regulation of AI which could cause “widespread harm” to the British people if not adequately addressed.

CLTR suggests that the UK’s Department for Science, Innovation and Technology (DSIT) will “lack visibility” on potential incidents within the UK government’s own use of AI in public services, noting an incident in which the Dutch tax authorities used a defective system to detect benefits fraud, leaving 26,000 families in immediate financial distress.

The think tank also emphasised concerns about a lack of awareness around “disinformation campaigns and biological weapon development,” which it claims may require “urgent responses to protect UK citizens.”

“DSIT lacks a central, up-to-date picture of these types of incidents as they emerge,” says the report.

“Though some regulators will collect some incident reports, we find that this is not likely to capture the novel harms posed by frontier AI.”

‘Encouraging Steps’

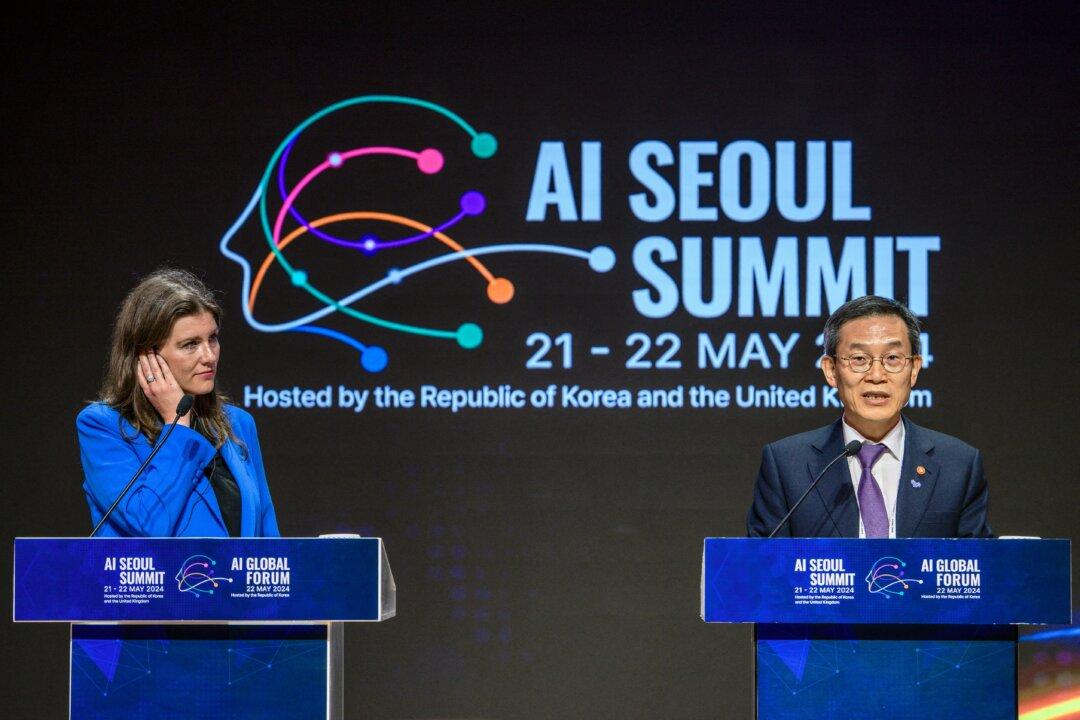

The release of the CLTR report comes as the UK prepares to go to the polls on July 4, with both major parties raising talking points about AI.

On May 21, the UK, United States, European Union, and eight other countries reached an agreement to “accelerate the advancement of the science of AI safety” at the AI Seoul Summit. Global leaders committed to work together for “safer AI” and the advancement of “human well-being.”

Technology Secretary Michelle Donelan said in a press release at the conclusion of the summit that AI can only reach its full potential “if we are able to grip the risks posed by this rapidly evolving, complex technology.”

“Ever since we convened the world at Bletchley last year, the UK has spearheaded the global movement on AI safety and when I announced the world’s first AI Safety Institute, other nations followed this call to arms by establishing their own,” said Ms. Donelan.

Jessica Chapplow, founder of Heartificial Intelligence, told The Epoch Times by email that “while it’s encouraging to see significant steps being taken,” it’s vital that the government remains “vigilant and proactive.”

“The commitments from leading AI companies to adopt voluntary safety and transparency measures, particularly as seen during the first global AI Safety Summit hosted by the UK government, are indeed positive strides forward,” said Ms. Chapplow.

“Proactive steps taken by the government, such as the first global AI Safety Summit and the commitment to collaborate with the UK AI Safety Institute, highlight a recognition of the potential risks posed by advanced AI systems.”

“It is clear that as AI continues to advance at a rapid pace, a more distinct legislative and regulatory framework will become essential to address emerging risks and ensure that AI development aligns with societal values and safety standards,” she said.

The Epoch Times has contacted DSIT for comment.