The proliferation of sexual artificial intelligence (AI) applications on smartphones has made it easier for perpetrators to commit offences, a parliamentary committee has been told.

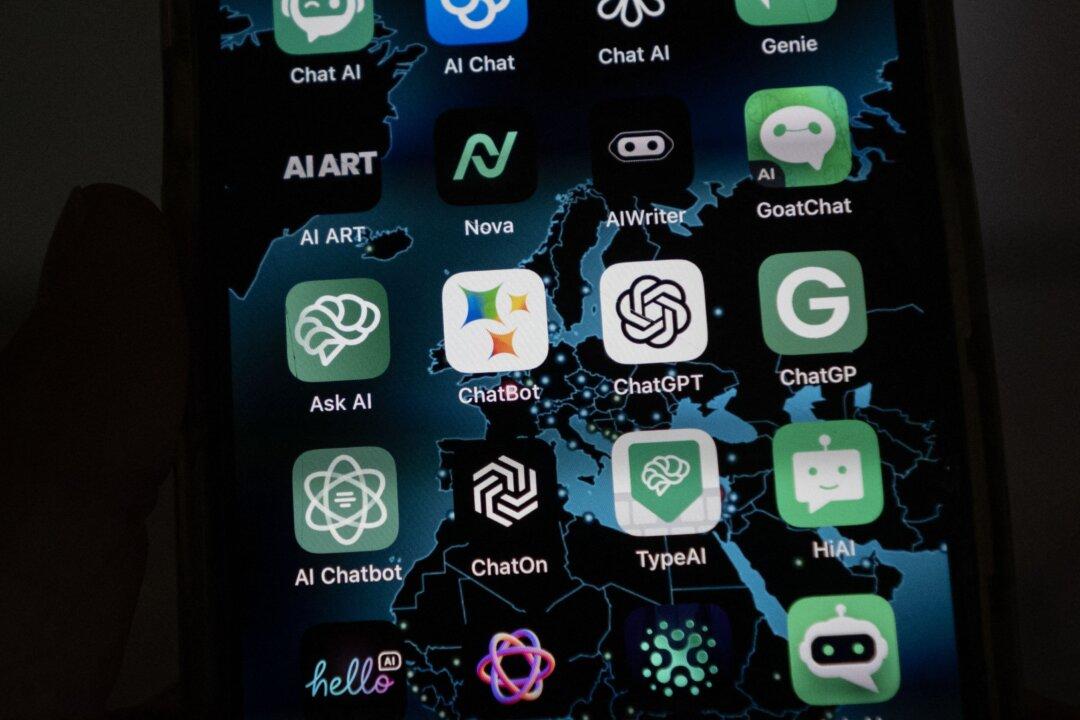

At a recent inquiry hearing on a new sexual deepfakes bill, eSafety Commissioner Julie Inman Grant said many apps designed for nefarious purposes were currently available in app stores.