A growing number of cases of child sexual abuse material being distributed online is prompting calls to crack down on perpetrators by removing the veil of anonymity users have when signing up for social media accounts.

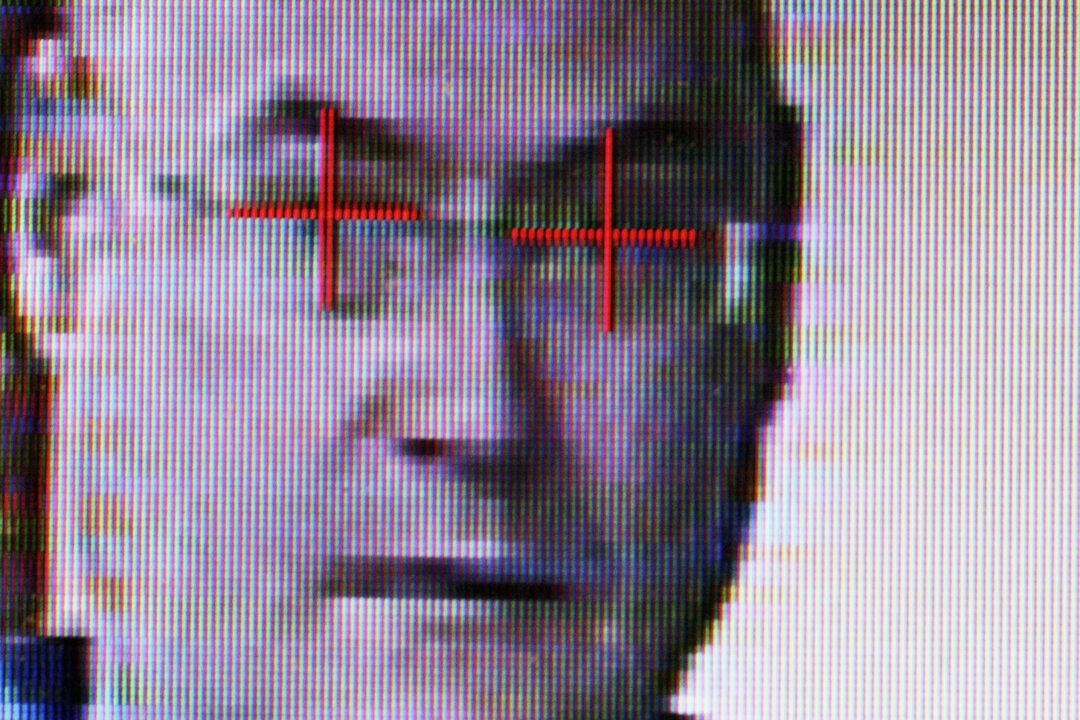

At an inquiry into the capability of law enforcement to tackle child exploitation, Uniting Church senior social justice advocate Mark Zirnsak said it was extremely difficult for police to track down those committing horrendous crimes against children.