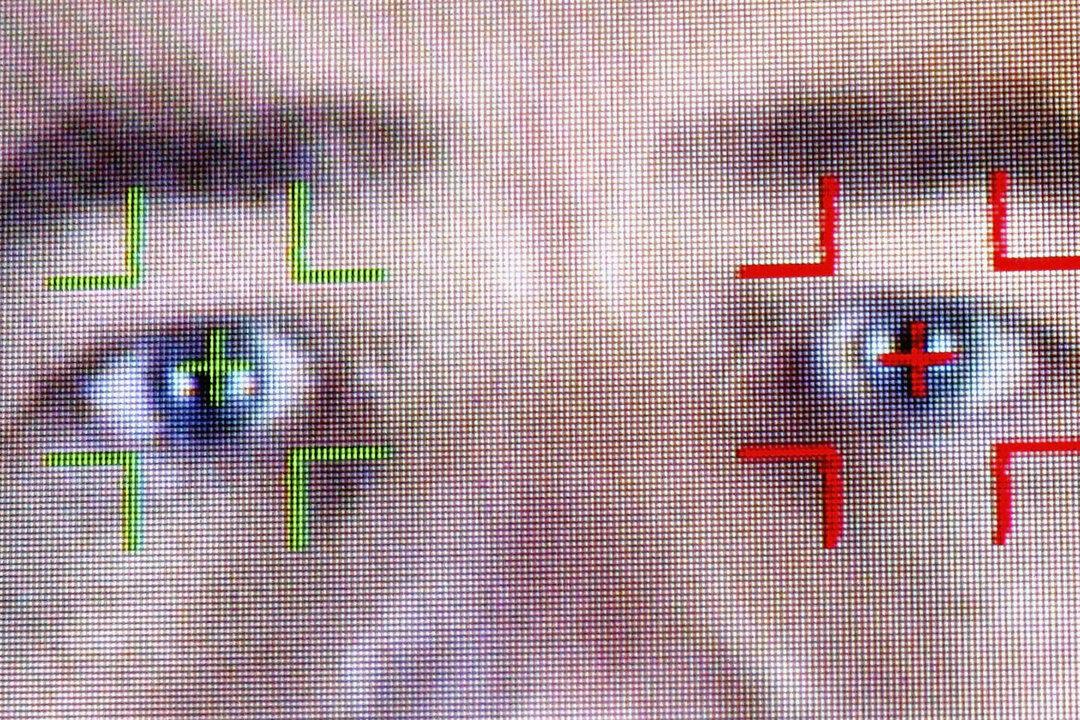

LONDON—Facial-recognition technology similar to that used in authoritarian countries such as China was trialed by police for two days on the streets of London.

The Metropolitan Police scanned the faces of Christmas shoppers in the central London shopping districts of Soho, Piccadilly Circus, and Leicester Square on Dec. 17 and 18.