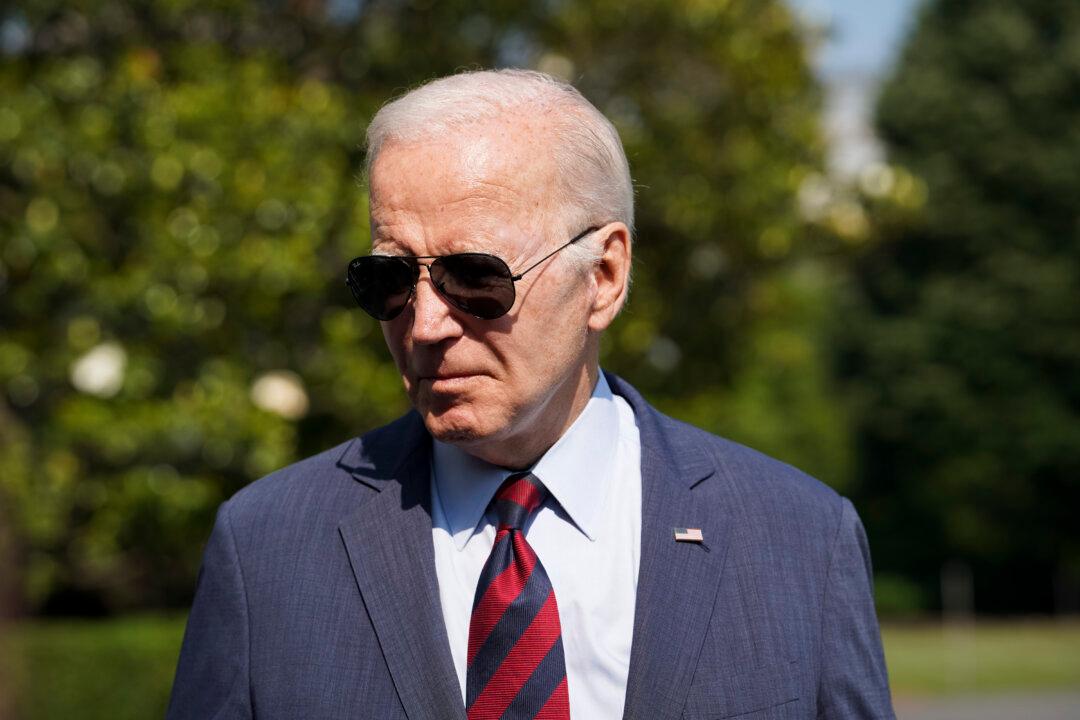

The Biden administration has announced new voluntary commitments from seven major artificial intelligence (AI) companies, another milestone in the White House’s attempt to get ahead of the fast-moving technology.

“This is pushing the envelope on what companies are doing and raising the standards for safety and security and trust of AI,” a senior White House official told reporters on July 20.