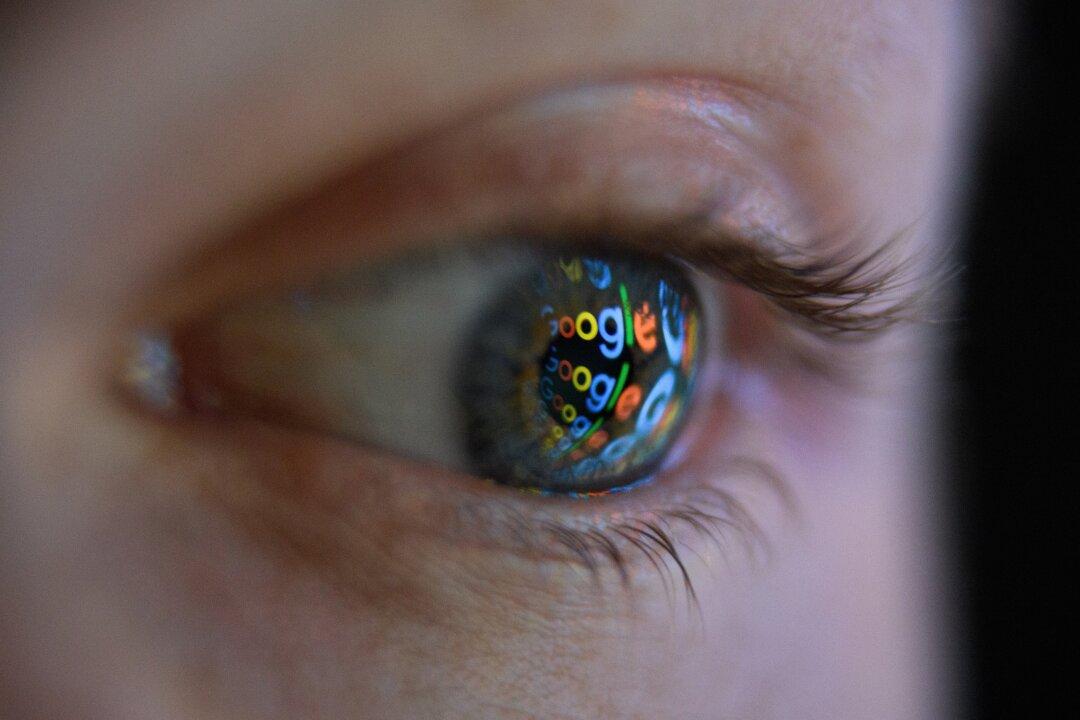

Google’s personalized search results are isolating people in bubbles of their own political preferences, making it harder for voters to reach informed decisions on contentious issues, according to a study by DuckDuckGo, the company that runs the privacy-oriented search engine DuckDuckGo.com.

The company had 87 volunteers across the country conduct the same series of searches on Google within about one hour. As expected, nearly all were shown significantly different results.