A graduate student in Michigan is questioning the repercussions of artificial intelligence (AI) technology after putting its capabilities to the test and receiving a shocking response.

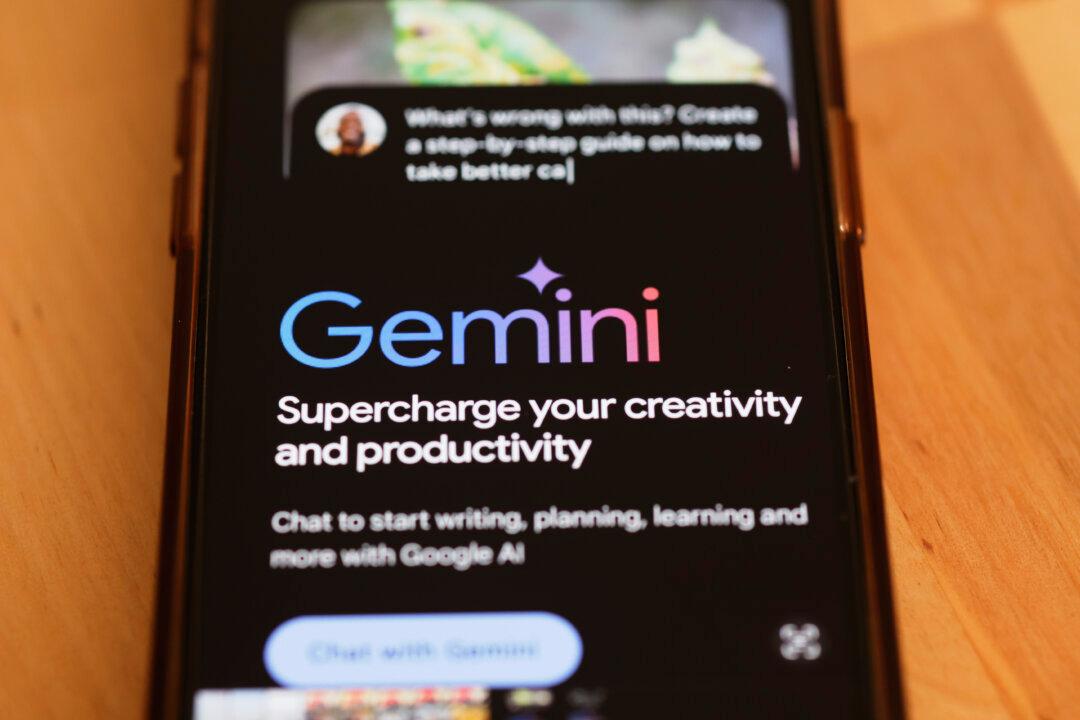

Vidhay Reddy, a 29-year-old college student, recently received an alarming response from Google’s AI chatbot, “Gemini,” after asking a homework-related question about aging adults.