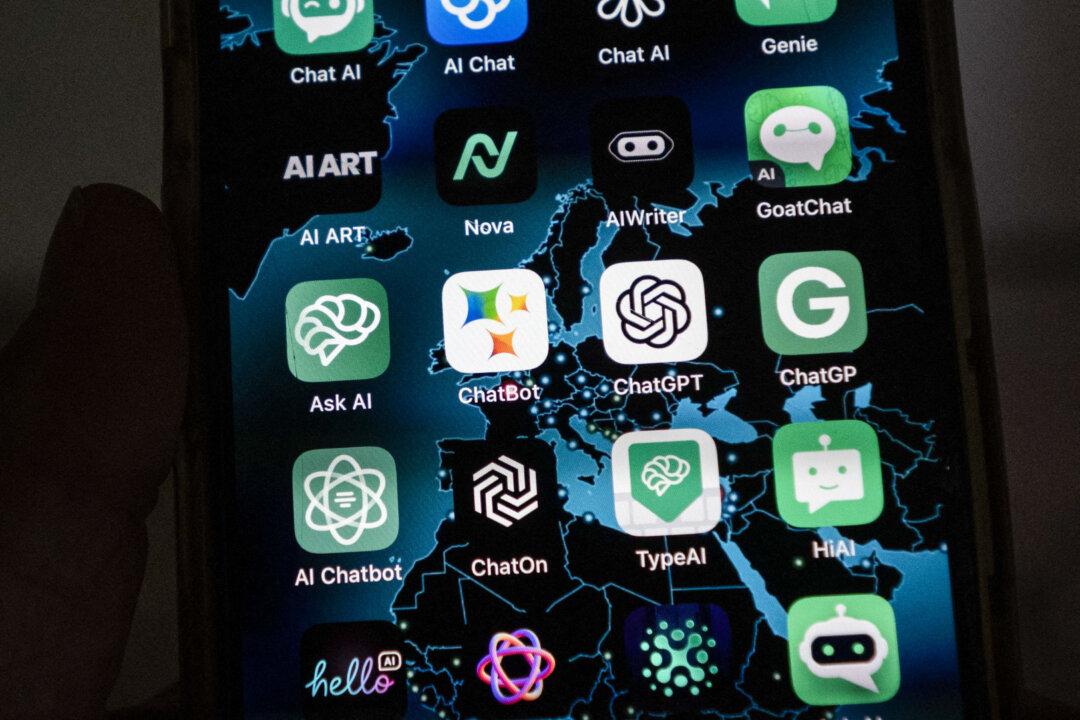

Sen. Richard Blumenthal (D-Conn.) called for strong regulatory control over artificial intelligence (AI) during a Senate hearing on July 25, citing the grave potential dangers of the “scary” technology.

Though AI can do “enormous good,” such as curing diseases and improving workplace efficiency, what catches people’s attention is the “science fiction image of an intelligent device out of control, autonomous, self-replicating, potentially creating disease, pandemic-grade viruses, or other kinds of evils, purposely engineered by people or simply the result of mistakes not malign intention,” Mr. Blumenthal said at the hearing, held by the Senate Subcommittee on Privacy, Technology, and the Law. “We need some kind of regulatory agency.”