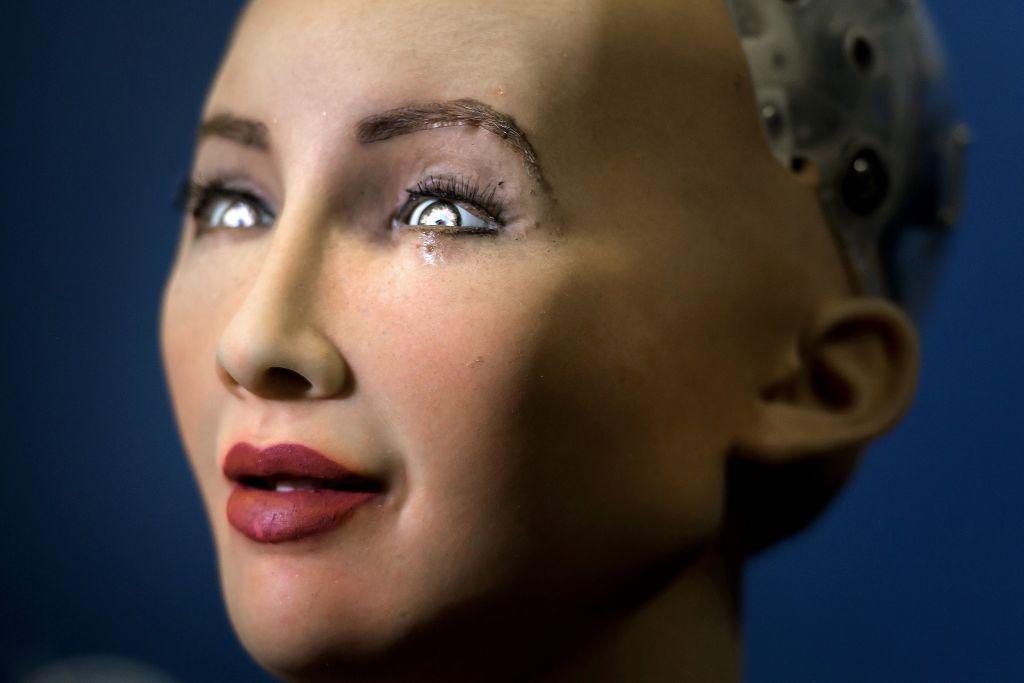

OpenAI’s artificial intelligence system GPT-4 has shown “sparks of artificial general intelligence (AGI),” displaying abilities across a wide range of knowledge domains with performance that is almost at “human-level,” according to a paper by Microsoft Research.

An AGI would be able to understand the world as human beings do and have a similar capacity to learn how to carry out various tasks. An early version of GPT-4 tested by Microsoft researchers showed “more general intelligence than previous AI models,” according to the March 22 paper. “Beyond its mastery of language, GPT-4 can solve novel and difficult tasks that span mathematics, coding, vision, medicine, law, psychology, and more, without needing any special prompting. Moreover, in all of these tasks, GPT-4’s performance is strikingly close to human-level performance, and often vastly surpasses prior models such as ChatGPT.”