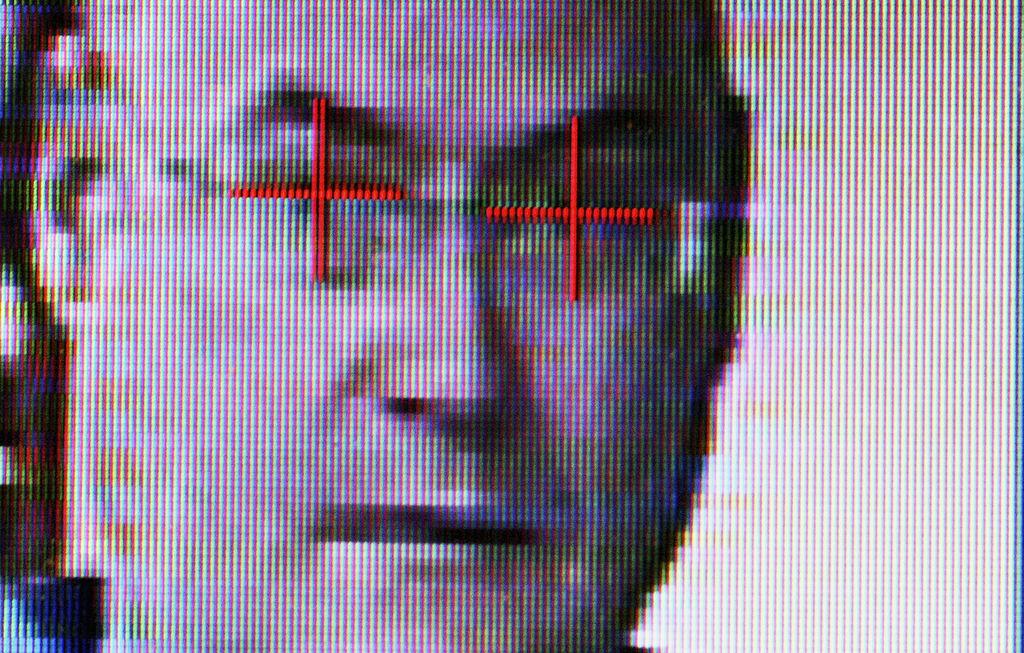

Biometric information collected by businesses, such as facial scans, fingerprints, and voice and eye scans, can be hacked and manipulated, and the proliferation of artificial intelligence has amplified the threat, the U.S. Federal Trade Commission (FTC) said in a recent warning.

In a May 18 policy statement (pdf), the FTC said that consumers face increasing risks from the collection and use of biometric information, including uses powered by AI technologies. Biometric information includes data that denote the physical, biological, or behavioral traits or measurements of individuals. Facial, fingerprint, iris, and voice recognition are among the biometric technologies prevalent today.