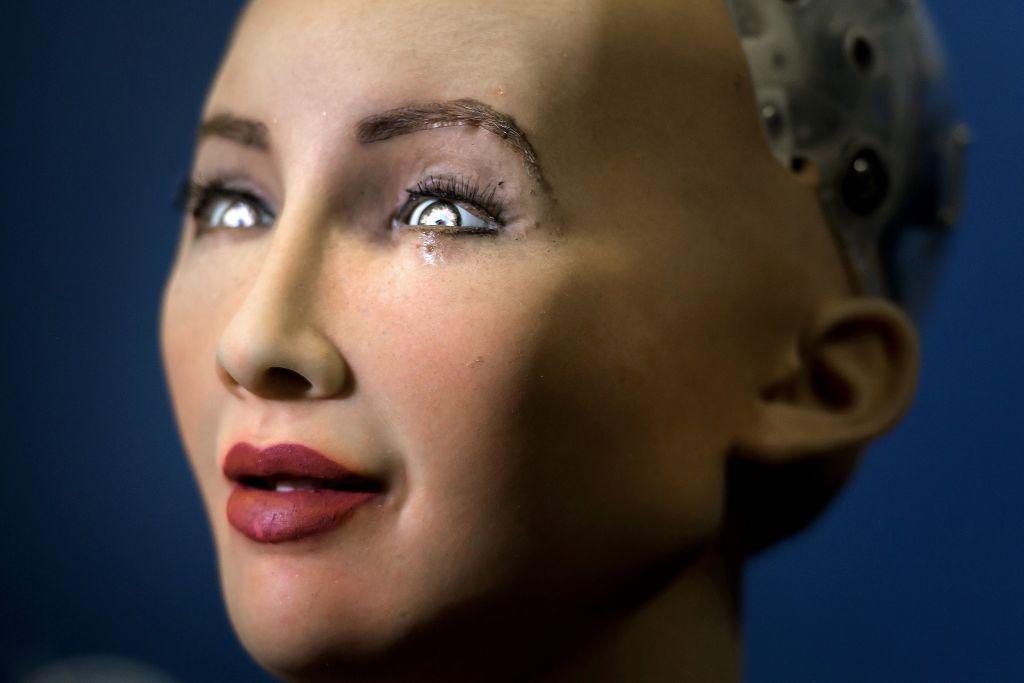

The U.S. Food and Drug Administration (FDA) has shed light on the possibility of using artificial intelligence (AI) and machine learning (ML) in the drug development process, pointing to the benefits these technologies could bring, such as digital versions of human patients.

“AI/ML’s growth in data volume and complexity, combined with cutting-edge computing power and methodological advancements, have the potential to transform how stakeholders develop, manufacture, use, and evaluate therapies. Ultimately, AI/ML can help bring safe, effective, and high-quality treatments to patients faster,” the FDA said in a May 10 post. Machine learning, a subset of AI, refers to computer systems that use algorithms and models to learn and adapt without explicit instructions, in a way that imitates human learning.