Commentary

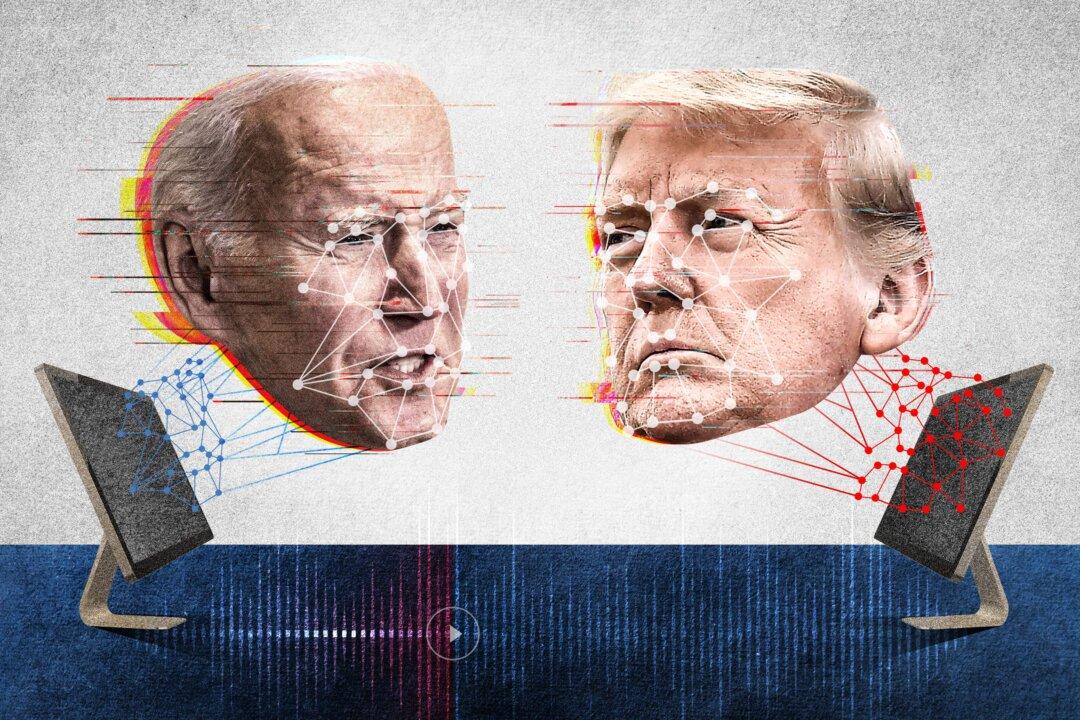

In 15 minutes of techno-evolutionary time, artificial intelligence-powered systems will threaten our civilization. Yesterday, I didn’t think so; I do now.
