Commentary

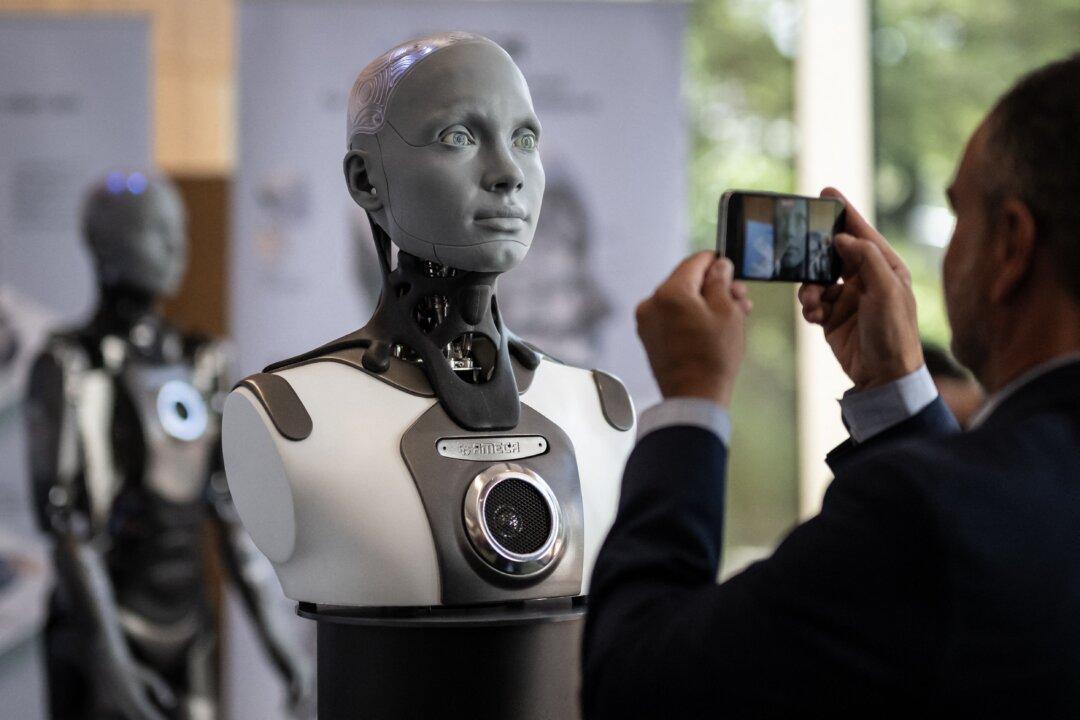

Concerns about machines or unchecked technological advances bringing disaster to humanity have persisted for more than a century. Many apocalyptic movies feature themes related to artificial intelligence (AI) systems.
