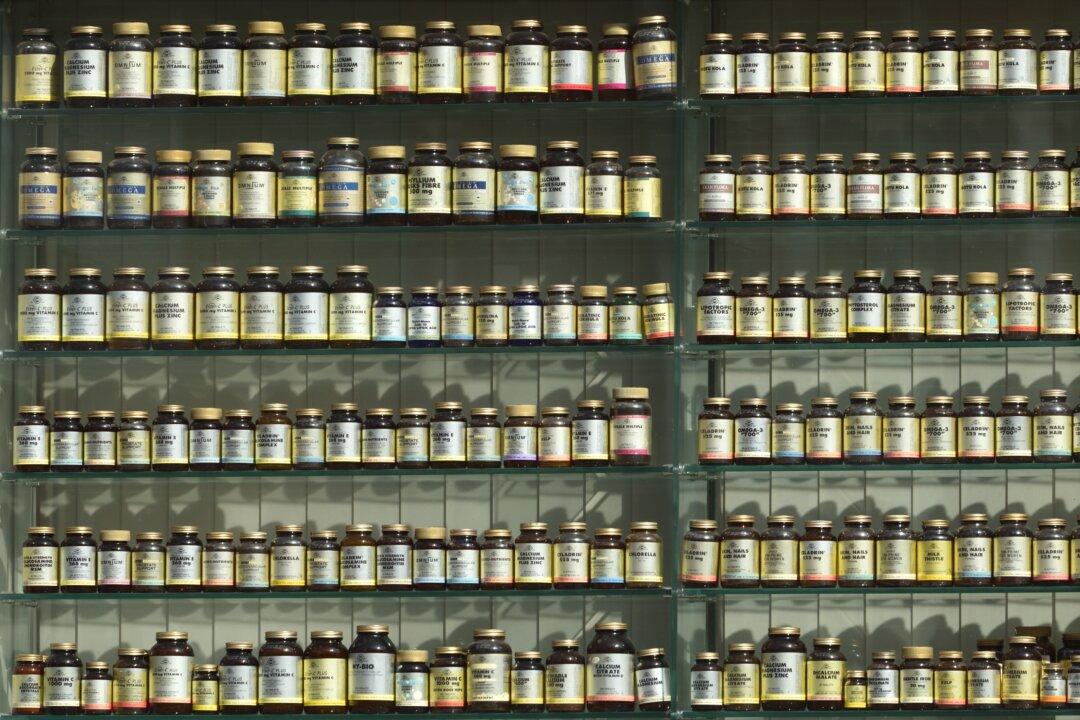

Taking vitamin supplements has no health benefit and some may even be harmful, a study published in the journal Annals of Internal Medicine reported on April 9.

Researchers from Tufts University in Massachusetts, who analysed the medical records of almost 31,000 adults aged 20 and above in the United States, found that taking vitamins in pill form had no significant positive impact on health and that benefits were seen only when nutrients were obtained from food.