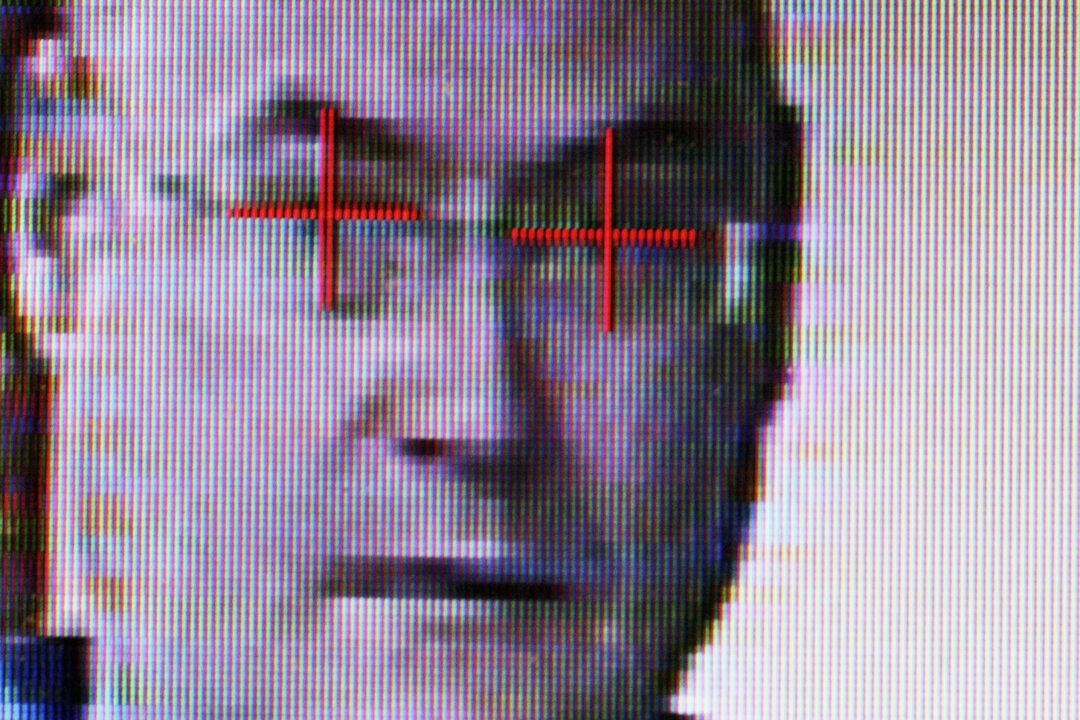

Microsoft has announced it will provide the New South Wales (NSW) Police Force in Australia with its object recognition technology to speed up the state’s analysis of surveillance footage.

The state police’s older systems stored CCTV footage, along with other forms of evidence required in investigations, on local servers, where officers had to review it manually, a time-consuming process.