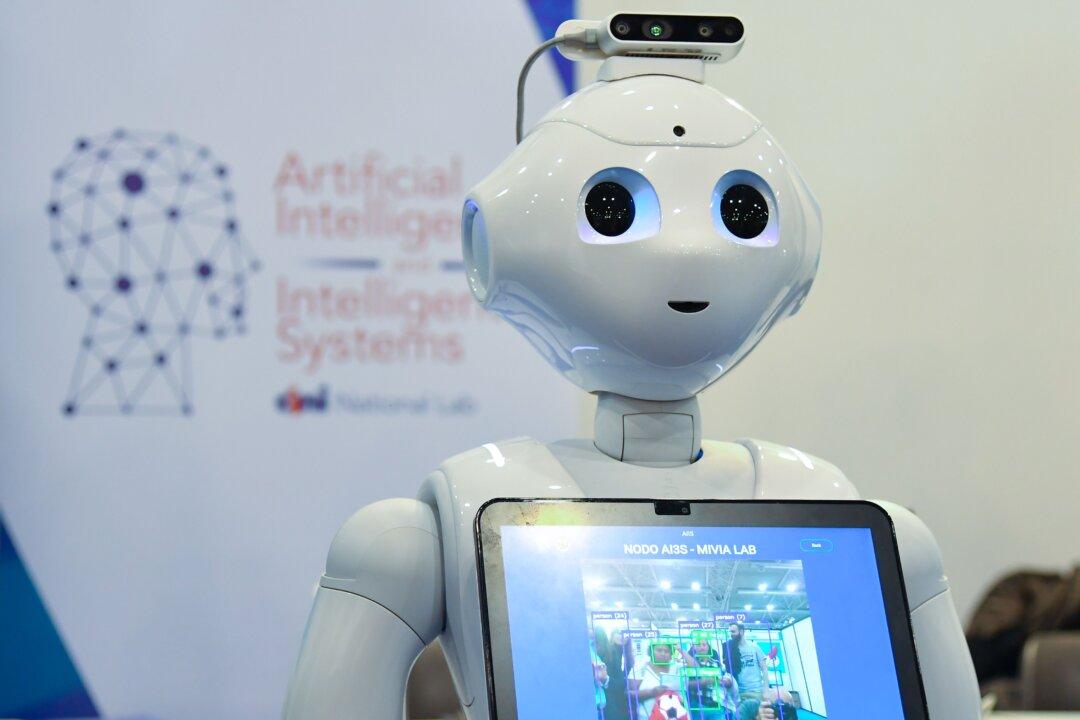

A Google engineer has been suspended after raising concerns about an artificial intelligence (AI) program he and a collaborator were testing, which he believes behaves like a human “child.”

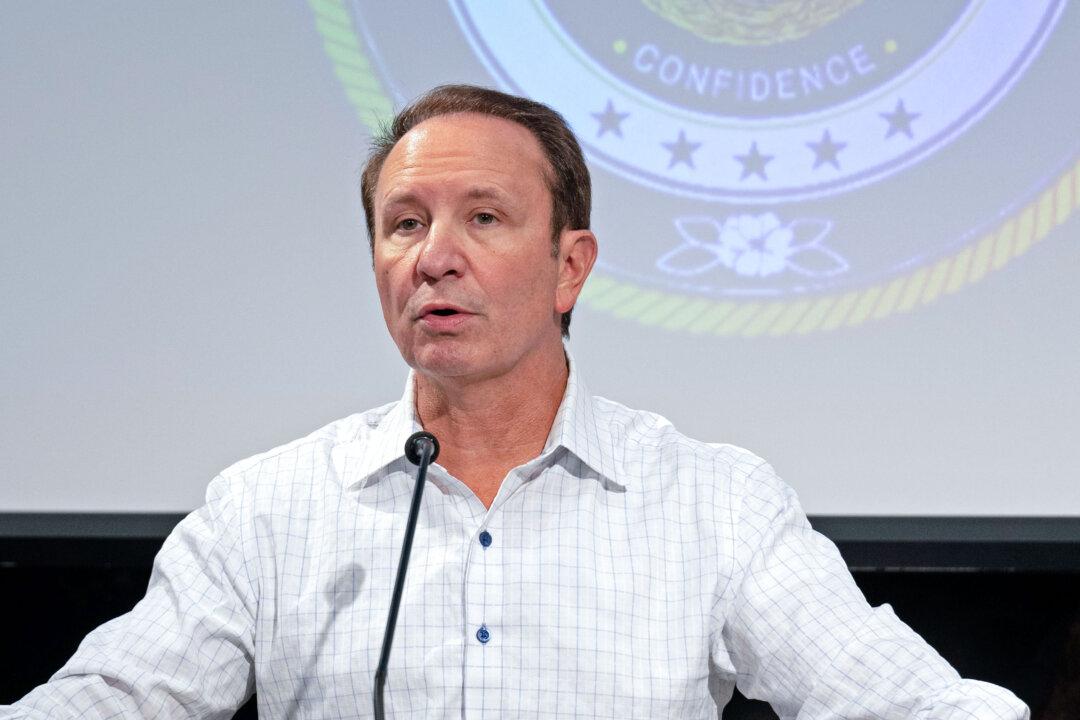

Google placed Blake Lemoine, a senior software engineer in its Responsible AI ethics group, on paid administrative leave on June 6 for breaching “confidentiality policies,” according to a blog post Lemoine published in early June. The move came after he raised concerns with Google’s upper leadership about what he described as the human-like behavior of the AI program he was testing.