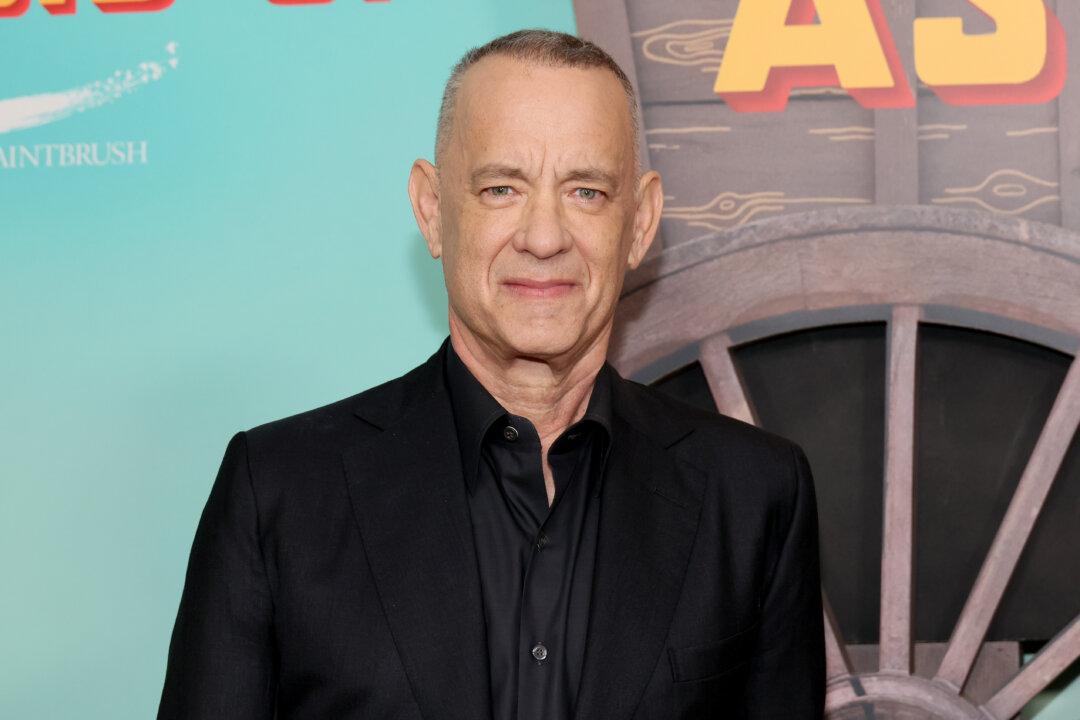

Actor Tom Hanks’s voice is allegedly being used fraudulently to promote pharmaceuticals on social media, and tech and legal experts say there’s not much he or any other celebrity can do about it.

That’s because there aren’t sufficient federal and state laws regulating the use of AI to replicate the voice or likenesses of public figures, according to GPTZero CEO Edward Tian.