The development of “killer robots” is a new way of turning human intelligence to perverse ends. Humans creating machines to kill and destroy on an unprecedented scale is a prospect that not even George Orwell could have imagined. Meanwhile, the leading world powers continue their un-merry-go-round of destruction and death, mostly of innocent civilians, without stopping to consider the consequences of their actions.

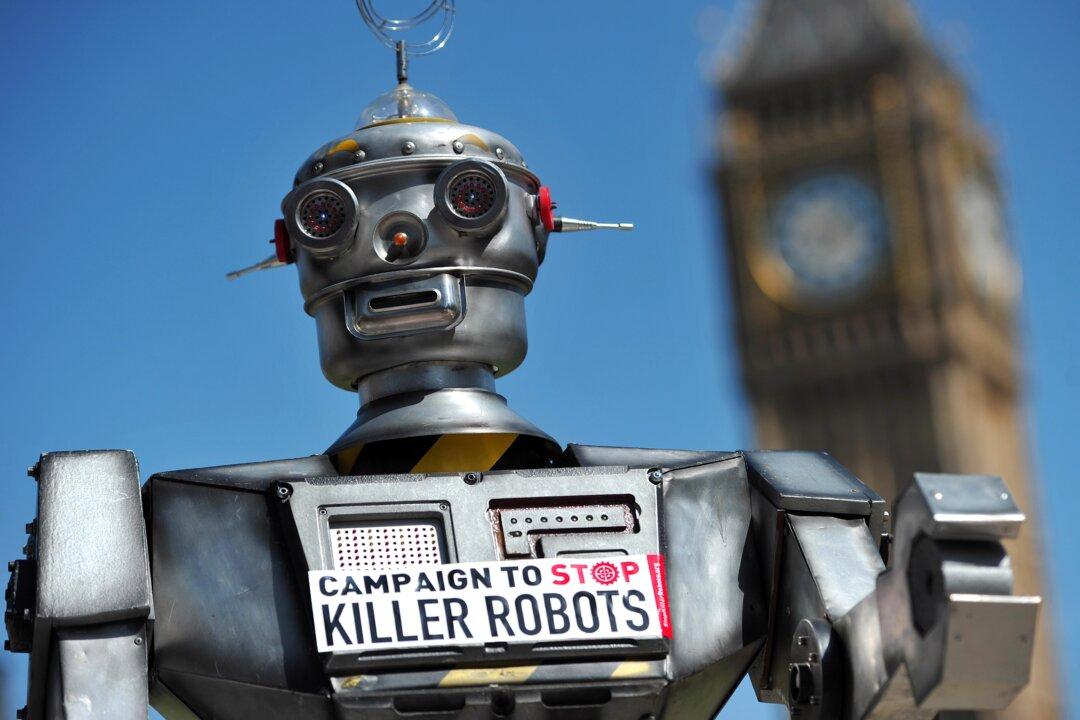

Killer robots are fully autonomous weapons that can identify, select, and engage targets without meaningful human control. Although fully developed weapons of this kind do not yet exist, leading military powers such as the United States, the U.K., Israel, Russia, China, and South Korea are already working on their precursors.

The U.S. Government Accountability Office reports that in 2012, 76 countries had drones of some kind, and 16 of them already possessed armed ones. The U.S. Department of Defense spends $6 billion every year on the research and development of better drones.