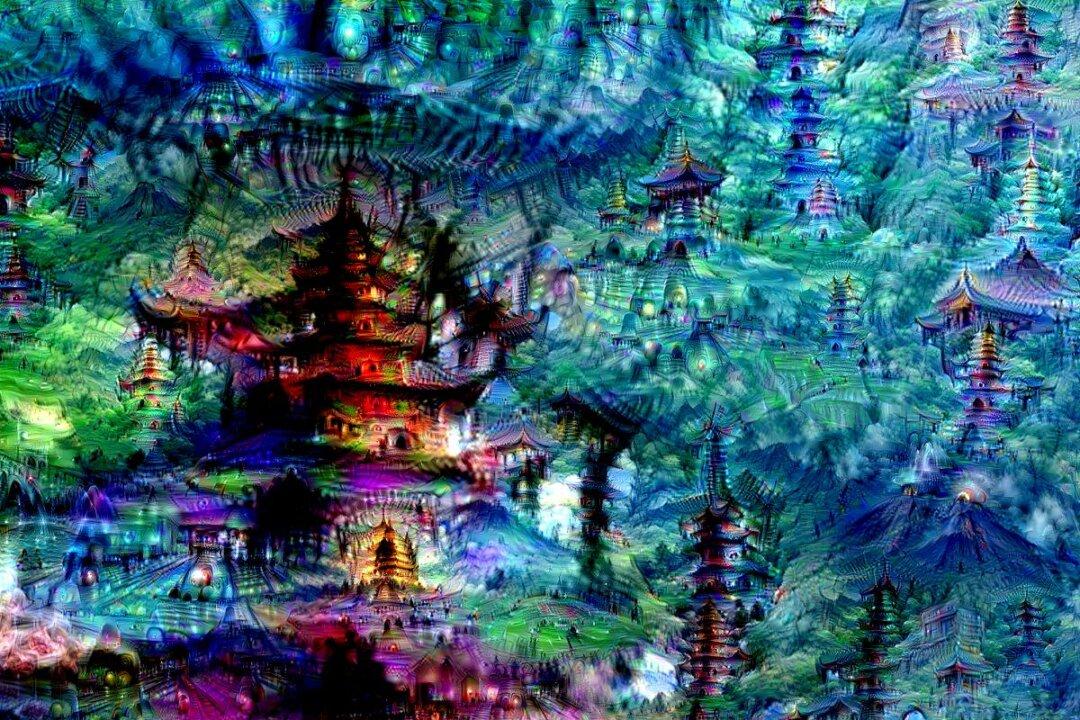

Last week, on June 17, Google’s Research blog published a striking series of computer-generated images, dubbed the “dreams” of the machine.

The surrealist landscapes were created by artificial neural networks (software loosely modeled on what scientists believe to be the biological structure of the human brain) that had been trained to recognize visual patterns.

For instance, to teach the computer to recognize a banana, the researchers feed it millions of training examples and gradually adjust the network’s parameters to improve its recognition abilities. In the process, the network formulates its own criteria for what constitutes a banana.
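For readers who want to see the idea in miniature, here is a rough sketch of that training loop. It is not Google's code: the "network" is a single logistic unit, the 16-pixel "images" are synthetic, and names such as `train_detector` and `template` are made up for illustration.

```python
import numpy as np

def train_detector(examples, labels, lr=0.5, epochs=500):
    """Fit one logistic unit by gradient descent on labeled examples."""
    weights = np.zeros(examples.shape[1])
    bias = 0.0
    for _ in range(epochs):
        scores = 1.0 / (1.0 + np.exp(-(examples @ weights + bias)))
        error = scores - labels  # positive where the guess is too high
        # Nudge the parameters a little in the direction that reduces the error.
        weights -= lr * examples.T @ error / len(labels)
        bias -= lr * error.mean()
    return weights, bias

# Synthetic stand-ins for "banana" and "not banana" images (hypothetical data).
rng = np.random.default_rng(0)
template = np.linspace(-1.0, 1.0, 16)
bananas = 0.5 + 0.4 * template + rng.normal(0.0, 0.05, size=(200, 16))
others = 0.5 - 0.4 * template + rng.normal(0.0, 0.05, size=(200, 16))
examples = np.vstack([bananas, others])
labels = np.concatenate([np.ones(200), np.zeros(200)])

weights, bias = train_detector(examples, labels)
predictions = (examples @ weights + bias) > 0
accuracy = (predictions == labels.astype(bool)).mean()
print(f"training accuracy: {accuracy:.2f}")
```

Nobody tells the unit what a banana looks like. The weights it ends up with are whatever separates the two piles of examples, which is the sense in which the machine forms its own criteria.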

Then, to better understand how the computer “sees” things, the researchers test the minimal threshold of visual patterns it needs to “see” a banana: they start with an image of random noise and slowly adjust it until the machine registers a banana.
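The noise-adjusting step can be sketched in a few lines as well. This is a toy under loud assumptions: the detector is a single hypothetical logistic unit with hand-picked weights rather than a deep network, and the function name `dream`, the step size, and the 0.99 threshold are illustrative choices. The procedure has the same shape, though: keep the detector fixed and change the picture.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def dream(weights, bias, image, step=0.05, threshold=0.99, max_steps=1000):
    """Nudge `image` until the toy detector's score reaches `threshold`."""
    image = image.copy()
    for n in range(max_steps):
        score = sigmoid(weights @ image + bias)
        if score >= threshold:
            return image, score, n
        # The detector's raw response is weights @ image + bias, so its
        # gradient with respect to the pixels is simply `weights`.
        image += step * weights
        image = np.clip(image, 0.0, 1.0)  # keep pixel values in a valid range
    return image, sigmoid(weights @ image + bias), max_steps

# Hypothetical detector: made-up weights standing in for a trained network.
weights = np.linspace(-1.0, 1.0, 64)
bias = -3.0

rng = np.random.default_rng(0)
noise = rng.uniform(0.0, 1.0, size=64)  # the random starting image

dreamed, score, steps = dream(weights, bias, noise)
print(f"detector score {score:.3f} after {steps} steps")
```

The picture that comes out is the noise pushed toward whatever the detector responds to most strongly, so it shows what the machine is looking for rather than what a banana actually looks like.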