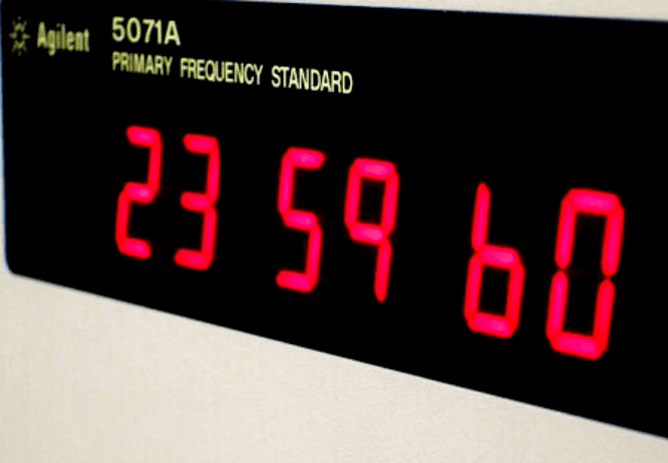

Most people would feel they can count on one day comprising the same number of hours, minutes and seconds as the next. But this isn't always the case: in 2015, June 30 will be one second longer than usual. A leap second will be added to reconcile two definitions of time, one astronomical and the other provided by atomic clocks.

Before the 1950s, time was defined by the position of the sun in the sky, as measured by instruments that monitor the Earth's rotation. But this rotation is not constant. It is gradually slowing, mainly because of tidal friction caused by the moon's gravitational pull, and on average the day is lengthening by about 1.7 milliseconds per century.
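A lengthening of 1.7 milliseconds per century sounds negligible, but the small daily excesses add up. The rough sketch below shows how. It rests on two simplifying assumptions that are illustrative only: the day starts out exactly 86,400 seconds long, and it then lengthens at a perfectly steady 1.7 ms per century. The real rotation also fluctuates irregularly, so this is not how leap seconds are actually scheduled. Because each day's excess grows linearly with time, the accumulated gap grows with the square of elapsed time. After one century it comes to roughly 31 seconds.

```python
# Rough illustration: drift between a steadily lengthening day and a clock
# that counts exactly 86,400 seconds per day.
# Assumptions (illustrative only): the day is exactly 86,400 s at the start
# and then lengthens at a constant 1.7 ms per century.

DAYS_PER_CENTURY = 36525              # days in a Julian century
LENGTHENING_S_PER_CENTURY = 1.7e-3    # 1.7 ms per century, as in the text


def accumulated_drift_seconds(centuries: float) -> float:
    """Add up each day's excess over 86,400 s, one day at a time."""
    total_days = round(centuries * DAYS_PER_CENTURY)
    drift = 0.0
    for day in range(total_days):
        elapsed_centuries = day / DAYS_PER_CENTURY
        # This day's length minus 86,400 s, in seconds.
        drift += LENGTHENING_S_PER_CENTURY * elapsed_centuries
    return drift


def closed_form_drift_seconds(centuries: float) -> float:
    """Integral of the daily excess: 0.5 * rate * days_per_century * T**2."""
    return 0.5 * LENGTHENING_S_PER_CENTURY * DAYS_PER_CENTURY * centuries ** 2


if __name__ == "__main__":
    for t in (1, 2, 5):
        print(f"{t} century(ies): day-by-day {accumulated_drift_seconds(t):.1f} s, "
              f"closed form {closed_form_drift_seconds(t):.1f} s")
```

The day-by-day sum and the closed-form integral agree to within a fraction of a second. Doubling the elapsed time roughly quadruples the drift, which is the quadratic growth described above.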

The varying length of the day has been known for centuries but only became a practical concern (outside astronomy) with the invention of atomic clocks in the 1950s. These provide a far more stable and easy-to-use definition of time, based on a particular microwave frequency absorbed by caesium atoms. Atomic clock signals were soon used to control standard-frequency radio transmitters, which telecommunication engineers could use to calibrate and synchronise equipment.